As organizations race to implement AI agents, many are building Model Context Protocol (MCP) servers to mediate between Large Language Models (LLMs) and external tools and resources. One of the most crucial challenges in deploying MCP servers is security: the non-deterministic inputs and outputs of LLMs create agent-specific risks such as prompt injection, data exfiltration, execution environment risks, and more.

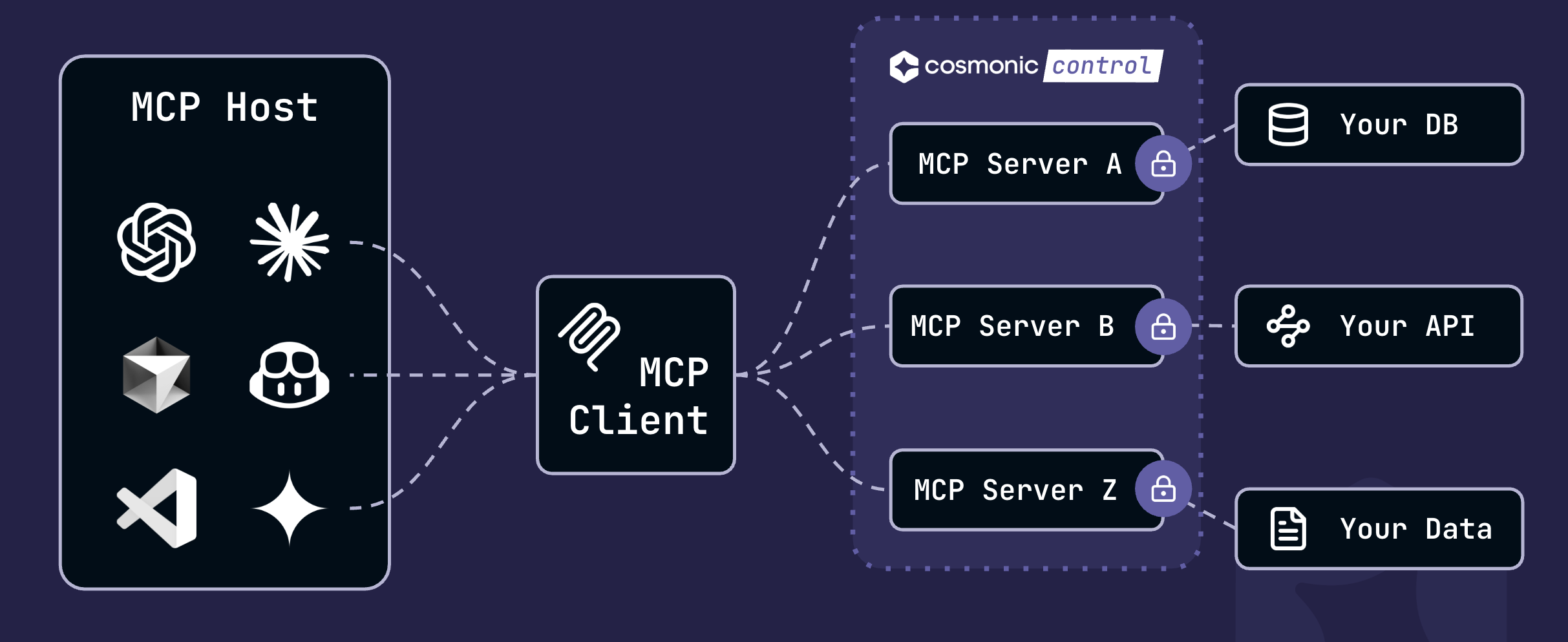

WebAssembly (Wasm) components provide new real-time security controls to address the MCP security problem. Wasm component binaries are portable, polyglot sandboxes that interact with the outside world via explicitly enabled, language-agnostic interfaces. When MCP servers are compiled to Wasm, they can be deployed with confidence that agents can interact only with approved tools and resources, in approved ways.

In this blog, we'll examine patterns for remote-hosting sandboxed MCP servers, explain how Wasm helps to mitigate security risks associated with AI agent integration, and demonstrate how to deploy a sandboxed MCP server with Wasm using Kubernetes and Cosmonic Control.

Understanding Remote MCP Servers

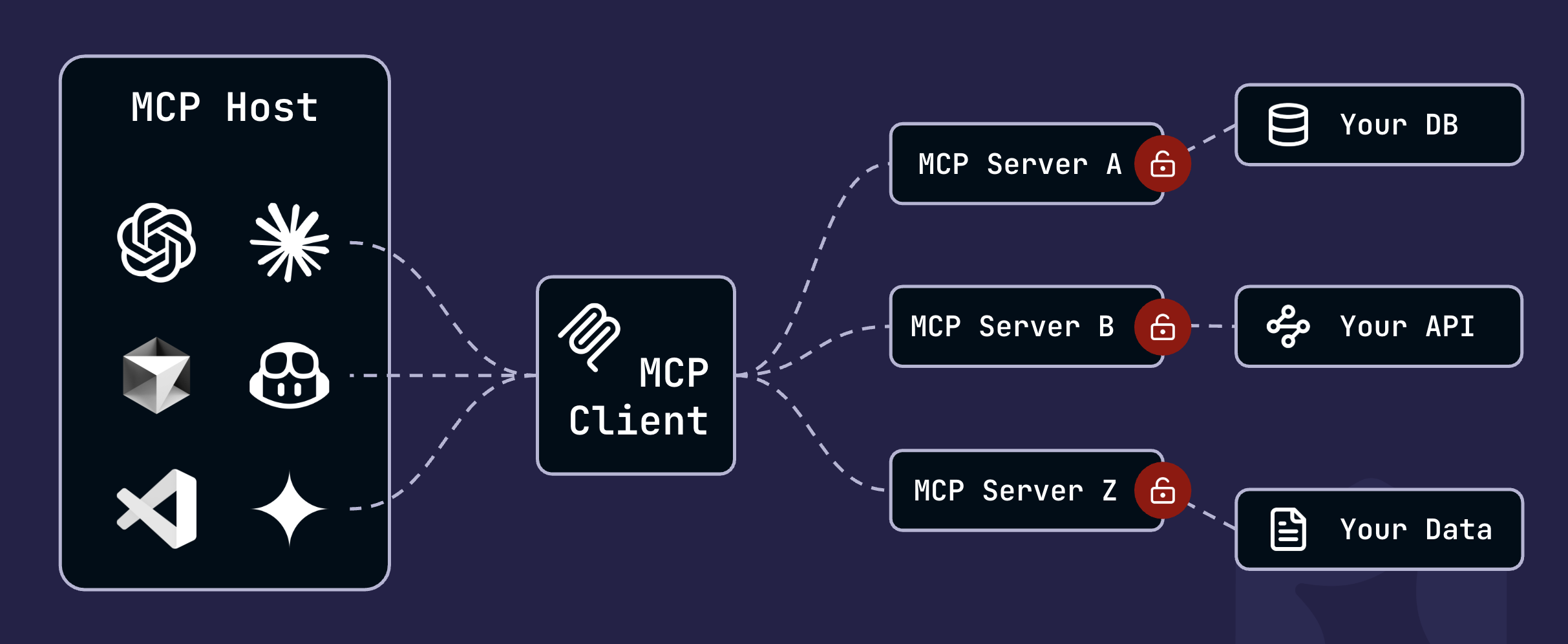

The Model Context Protocol (MCP) is an open protocol introduced by Anthropic that has swiftly emerged as the de facto standard for LLM and agent extensions. In order to extend models' capabilities, MCP adopts a client-host-server architecture:

- MCP hosts: AI applications or assistants, such as Claude, ChatGPT, or Copilot, that embed a model and initiate connections to MCP servers.

- MCP servers: Servers that expose capabilities to MCP hosts via functions called Tools, structured data called Resources, and prompt templates called Prompts.

- MCP clients: Connectors within the host that handle communication, such as authorization and data requests, between the MCP host and an MCP server.

For an idea of how these pieces fit together, you might create an MCP server that connects to a weather API, enabling you to query ChatGPT about weather alerts available via the API.
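To make the client-server exchange more concrete: MCP messages are JSON-RPC 2.0 objects. The Python sketch below only builds the payloads a client might send to the weather server described above once a session has been initialized. The tool name `get_alerts` and its `state` argument are hypothetical, and the transport (stdio or Streamable HTTP) is left out.

```python
import json
from itertools import count
from typing import Optional

# MCP messages are JSON-RPC 2.0 objects. This sketch only builds payloads;
# the transport (stdio or Streamable HTTP) is out of scope.
_request_ids = count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request with a fresh, auto-incrementing id."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# 1. The client discovers which tools the weather server exposes.
list_tools = jsonrpc_request("tools/list")

# 2. After the model picks a tool, the client forwards the call.
#    "get_alerts" and its "state" argument are hypothetical names.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "get_alerts", "arguments": {"state": "CA"}},
)

if __name__ == "__main__":
    print(json.dumps(list_tools))
    print(json.dumps(call_tool, indent=2))
```

The server answers each request with a JSON-RPC response carrying the same `id`; for `tools/list`, the result describes each tool by name, description, and input schema, which is how the host learns what it is allowed to call.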

If you're building an MCP server for your own use, the server might well be hosted locally, but for enterprise purposes, servers are increasingly deployed as remote MCP servers, meaning that they are available and accessible on the Internet.

For these remote MCP servers—especially those dealing with sensitive data like financial information or medical records—users might go through a standard authorization flow and give the necessary permissions to MCP clients.

Because MCP servers mediate access to potentially sensitive APIs, databases, and resources, running them on traditional containerized infrastructure exposes a broad attack surface for exploiting agentic AI workflows.

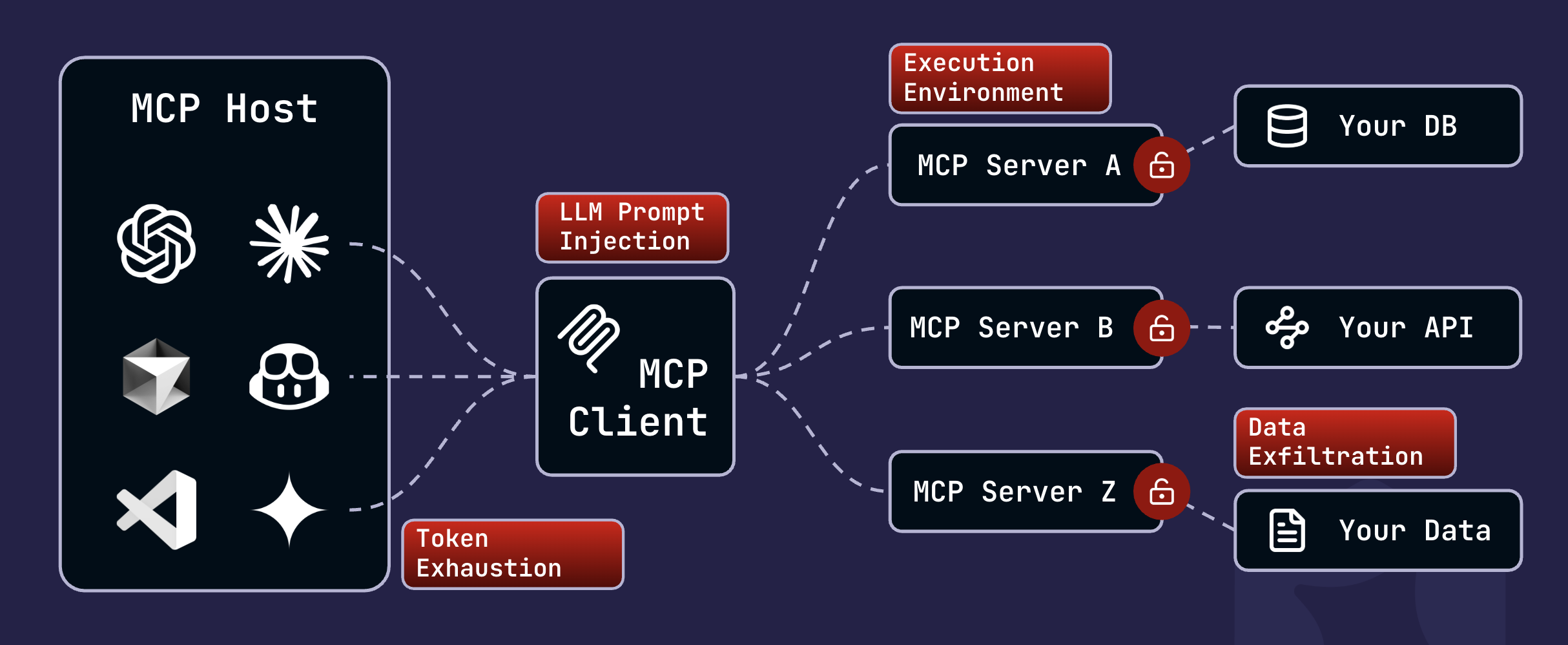

Novel Security Risks of MCP and Agentic AI

MCP servers play a crucial role in extending models' capabilities, which also makes them an attractive target. OWASP's Top 10 for LLM and GenAI Applications outlines the most severe risks, several of which apply directly to MCP servers:

- Prompt injection can trigger unintended capabilities through an MCP server's tools, data, or prompt templates, leading to command injection, code injection, remote code execution, and more.

- MCP hosts may exfiltrate sensitive data, whether inadvertently or as the result of attacks such as prompt injection.

- MCP servers may be abused to consume excessive resources, resulting in token exhaustion.

- The execution environment becomes a source of novel risks, including the prospect of an MCP server acting on internal networks, exploiting trusted network paths, and executing malicious cross-tenant or cross-context activity.

Fortunately, these risks can be addressed and mitigated by using Wasm as a sandbox for MCP servers.

Why Wasm?

A Wasm component binary is bytecode that runs on the highly efficient virtual architecture of a Wasm runtime. The design and unique characteristics of Wasm components make them well-suited to sandbox remote MCP servers:

- Because Wasm components are highly portable, they can be deployed anywhere from the cloud to the edge using wasmCloud, the open source Cloud Native Computing Foundation (CNCF) project at the heart of Cosmonic Control.

- Component interfaces are virtualizable. For example, a virtual file system may be used for wasi-filesystem, allowing users to strictly control filesystem visibility and I/O behavior and preventing unauthorized access to sensitive resources.

- Wasm components can only interact with the outside world via explicitly granted capabilities.

An MCP component can be configured to respond to incoming HTTP requests from an explicitly defined entity, but it cannot be invoked in any other way. Wasm components give organizations a way to isolate MCP servers with standard cloud native security controls and network-level boundaries around agentic capabilities.
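The deny-by-default model can be illustrated with a small conceptual sketch. This is not the wasmCloud, WASI, or any Wasm runtime API: `SandboxHost`, `VirtualFS`, `try_call`, and the `get_pet` tool are hypothetical names. A guest can only reach capabilities the operator explicitly links in, and its "filesystem" is a virtual view that exposes only chosen paths, loosely analogous to virtualizing wasi-filesystem.

```python
from typing import Callable, Dict


class CapabilityDenied(PermissionError):
    """Raised when the guest asks for something the host never granted."""


class VirtualFS:
    """In-memory file tree: the guest sees only these paths, never the host disk."""

    def __init__(self, files: Dict[str, str]) -> None:
        self._files = dict(files)

    def read(self, path: str) -> str:
        if path not in self._files:
            raise CapabilityDenied(f"path not visible to guest: {path}")
        return self._files[path]


class SandboxHost:
    """Conceptual deny-by-default host (an illustration, not a Wasm runtime API)."""

    def __init__(self) -> None:
        self._granted: Dict[str, Callable[..., object]] = {}

    def grant(self, name: str, impl: Callable[..., object]) -> None:
        # The operator explicitly links each capability the guest may use.
        self._granted[name] = impl

    def call(self, name: str, *args: object) -> object:
        impl = self._granted.get(name)
        if impl is None:
            raise CapabilityDenied(f"capability not granted: {name}")
        return impl(*args)


def try_call(host: SandboxHost, name: str, *args: object) -> object:
    """Return the call's result, or "denied" if the sandbox refuses it."""
    try:
        return host.call(name, *args)
    except CapabilityDenied:
        return "denied"


if __name__ == "__main__":
    host = SandboxHost()
    vfs = VirtualFS({"/data/pets.json": '[{"id": 1, "name": "Rex"}]'})
    host.grant("read_file", vfs.read)
    # Hypothetical tool backed by an approved API; nothing else is linked.
    host.grant("get_pet", lambda pet_id: {"id": pet_id, "name": "Rex"})

    print(try_call(host, "get_pet", 1))
    print(try_call(host, "read_file", "/data/pets.json"))
    print(try_call(host, "read_file", "/etc/passwd"))  # denied
    print(try_call(host, "run_shell", "ls /"))         # denied
```

In a Wasm runtime, the equivalent check typically happens earlier: a component's imports must be satisfied when it is instantiated, so an interface the host never provides cannot be called at all, rather than failing at call time as in this sketch.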

Let's take a look at what the deployment of a Wasm-sandboxed MCP server looks like in practice.

Demo: Deploy an MCP Server to Cosmonic Control in a Wasm Sandbox

If you'd like to learn how to quickly generate an MCP Server from an existing OpenAPI schema and compile it to a sandboxed Wasm binary, check out our blog, Generate Sandboxed MCP Servers with Wasm Shell and OpenAPI2MCP.

For this deployment example, you'll just need some standard Kubernetes tooling and a free trial key for Cosmonic Control:

- kubectl

- Helm v3.8.0+

- Free trial key for the Cosmonic Control Technical Preview

- Optional: node (NodeJS runtime) and npm (Node Package Manager)

Install Local Kubernetes Environment

For the best local Kubernetes development experience, we recommend installing kind and starting a cluster with the following kind-config.yaml configuration, enabling simple local ingress with Envoy:

```yaml
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
# A single control-plane node; host port 80 forwards to the Envoy NodePort (30950).
nodes:
- role: control-plane
  extraPortMappings:
  - containerPort: 30950
    hostPort: 80
    protocol: TCP
```

The following command downloads the kind-config.yaml from the control-demos repository, starts a cluster, and deletes the config upon completion:

```shell
curl -fLO https://raw.githubusercontent.com/cosmonic-labs/control-demos/refs/heads/main/kind-config.yaml \
  && kind create cluster --config=kind-config.yaml \
  && rm kind-config.yaml
```

Install Cosmonic Control

You'll need a trial license key to follow these instructions. Sign up for Cosmonic Control's free trial to get a key.

Deploy Cosmonic Control to Kubernetes with Helm:

```shell
helm install cosmonic-control oci://ghcr.io/cosmonic/cosmonic-control \
  --version 0.3.0 \
  --namespace cosmonic-system \
  --create-namespace \
  --set envoy.service.type=NodePort \
  --set envoy.service.httpNodePort=30950 \
  --set cosmonicLicenseKey="<insert license here>"
```

Deploy a HostGroup:

```shell
helm install hostgroup oci://ghcr.io/cosmonic/cosmonic-control-hostgroup \
  --version 0.3.0 \
  --namespace cosmonic-system
```

Deploy the MCP Server component:

Deploy the petstore-mcp example:

```shell
helm install petstore-mcp oci://ghcr.io/cosmonic-labs/charts/http-trigger \
  --version 0.1.2 \
  -f https://raw.githubusercontent.com/cosmonic-labs/control-demos/refs/heads/main/petstore-mcp/values.http-trigger.yaml
```

Connect MCP Inspector to the Deployed MCP Server

If you'd like to debug your MCP server, you can start the official MCP Inspector with the following command:

```shell
npx @modelcontextprotocol/inspector
```

Configure the MCP Inspector's connection:

- Transport Type: Streamable HTTP

- URL: http://petstore-mcp.localhost.cosmonic.sh/v1/mcp

- Connection Type: Via Proxy

You can explore the MCP Server's available tools in the Tools tab and list available resources in the Resources tab.
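If you'd rather probe the endpoint without the Inspector, the Python sketch below builds the first message any MCP client sends: an `initialize` request over Streamable HTTP, using only the standard library. The protocol version string and the client name are assumptions, and `send_initialize` is a hypothetical helper that only works while the local cluster from this walkthrough is running.

```python
import json
import urllib.request

# URL from the Inspector configuration above; assumes the local kind +
# Cosmonic Control setup from this walkthrough is running.
MCP_URL = "http://petstore-mcp.localhost.cosmonic.sh/v1/mcp"

# Assumption: the server accepts the 2025-03-26 protocol revision, which
# introduced Streamable HTTP. The server may negotiate a different version.
PROTOCOL_VERSION = "2025-03-26"


def build_initialize_request(url: str = MCP_URL) -> urllib.request.Request:
    """Build (but do not send) the first JSON-RPC message of an MCP session."""
    payload = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "initialize",
        "params": {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {},
            # Hypothetical client name/version, for illustration only.
            "clientInfo": {"name": "sketch-client", "version": "0.1.0"},
        },
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Streamable HTTP servers may reply with plain JSON or an SSE stream.
            "Accept": "application/json, text/event-stream",
        },
        method="POST",
    )


def send_initialize(url: str = MCP_URL, timeout: float = 10.0) -> str:
    """POST the initialize request; requires the local cluster to be running."""
    req = build_initialize_request(url)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        # The server may assign a session ID to echo on later requests.
        session_id = resp.headers.get("Mcp-Session-Id")
        body = resp.read().decode("utf-8")
    return f"session={session_id}\n{body}"
```

A complete client would follow a successful response with a `notifications/initialized` notification, echoing any `Mcp-Session-Id` header, before calling `tools/list`.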

Conclusion

For information on generating MCP servers like the one we deployed here from OpenAPI schemas, check out our blog, Generate Sandboxed MCP Servers with Wasm Shell and OpenAPI2MCP.

If you'd like to chat about sandboxed MCP server development, Wasm, or wasmCloud, join the Cosmonic team on the wasmCloud Slack or at weekly wasmCloud community meetings. Hope to see you there!